Deploying a Python Flask application on Knative

To understand Knative, let’s try to build and deploy a Flask application that outputs the current timestamp in the response. Let’s start by building the app.

Building the Python Flask app

We need two files to build the app: the application code and a Dockerfile.

The app.py file looks like this:

import os
import datetime

from flask import Flask

app = Flask(__name__)


@app.route('/')
def current_time():
    ct = datetime.datetime.now()
    return 'The current time is : {}!\n'.format(ct)


if __name__ == '__main__':
    # Local runs only; inside the container, gunicorn serves `app` on $PORT.
    app.run(debug=True, host='0.0.0.0', port=int(os.environ.get('PORT', 8080)))
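Flask’s `app` object is a WSGI callable, and that callable is what gunicorn loads through the `app:app` argument in the Dockerfile. As a hedged illustration, here is a standard-library-only sketch of the same endpoint written as a bare WSGI function and invoked in-process with `wsgiref` test defaults, so no server or Flask install is needed. The names `time_app` and `call_in_process` are hypothetical, and unlike Flask this sketch ignores routing and answers every path.

```python
import datetime
from wsgiref.util import setup_testing_defaults


def time_app(environ, start_response):
    """Hypothetical bare-WSGI equivalent of the Flask current_time() route."""
    ct = datetime.datetime.now()
    body = 'The current time is : {}!\n'.format(ct).encode('utf-8')
    start_response('200 OK', [('Content-Type', 'text/plain; charset=utf-8'),
                              ('Content-Length', str(len(body)))])
    return [body]


def call_in_process(app, path='/'):
    """Invoke a WSGI app directly and return (status, body_text)."""
    environ = {}
    setup_testing_defaults(environ)  # fills in REQUEST_METHOD, SERVER_NAME, wsgi.input, ...
    environ['PATH_INFO'] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured['status'] = status
        return lambda data: None  # legacy write() callable, unused here

    body = b''.join(app(environ, start_response))
    return captured['status'], body.decode('utf-8')


if __name__ == '__main__':
    status, text = call_in_process(time_app)
    print(status)
    print(text, end='')
```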

We will need the following Dockerfile to build this application:

FROM python:3.7-slim
ENV PYTHONUNBUFFERED True
ENV APP_HOME /app
WORKDIR $APP_HOME
COPY . ./
RUN pip install Flask gunicorn
# Knative injects $PORT at runtime; --timeout 0 disables gunicorn's worker timeout.
CMD exec gunicorn --bind :$PORT --workers 1 --threads 8 --timeout 0 app:app
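Knative tells the container which port to listen on through the `PORT` environment variable (8080 by default), which is why the `CMD` binds gunicorn to `:$PORT`. The sketch below mimics that contract with the standard library only: it reads `PORT` with a fallback, serves a single request with `wsgiref.simple_server`, and fetches it over loopback. The helper names and the `hello_app` function are hypothetical stand-ins, not part of the original app.

```python
import os
import threading
import urllib.request
from wsgiref.simple_server import WSGIRequestHandler, make_server


def resolve_port(environ=os.environ, default=8080):
    """Return the port the container should listen on, as Knative expects."""
    return int(environ.get('PORT', default))


def hello_app(environ, start_response):
    # Hypothetical stand-in for the Flask app; any WSGI callable works here.
    start_response('200 OK', [('Content-Type', 'text/plain')])
    return [b'ok\n']


class QuietHandler(WSGIRequestHandler):
    def log_message(self, format, *args):
        pass  # suppress per-request logging to keep the demo output clean


def serve_one_request(app, port):
    """Serve exactly one request on 127.0.0.1:port and return the body received."""
    with make_server('127.0.0.1', port, app, handler_class=QuietHandler) as httpd:
        httpd.timeout = 5  # handle_request() gives up instead of blocking forever
        actual_port = httpd.server_port  # the OS-assigned port when port == 0
        worker = threading.Thread(target=httpd.handle_request)
        worker.start()
        # An empty ProxyHandler bypasses any http_proxy setting for loopback.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open('http://127.0.0.1:{}/'.format(actual_port), timeout=5) as resp:
            body = resp.read().decode('utf-8')
        worker.join()
    return body


if __name__ == '__main__':
    print(resolve_port({'PORT': '9000'}))
```

Calling `serve_one_request(hello_app, 0)` lets the OS pick a free port, so the demo never collides with anything already listening.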

Now, let’s build the Docker image using the following command:

$ docker build -t <your_dockerhub_user>/py-time .

Now that the image is ready, let’s push it to Docker Hub by using the following command:

$ docker push <your_dockerhub_user>/py-time
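If you rebuild often, the two commands can be scripted. This is a minimal sketch that assembles the same `docker build` and `docker push` argument lists and only executes them when `dry_run` is false; it assumes the `docker` CLI is on your `PATH` and that you are already logged in to Docker Hub. The function name and its parameters are hypothetical.

```python
import subprocess


def build_and_push(dockerhub_user, image_name='py-time', context='.', dry_run=True):
    """Build and push <dockerhub_user>/<image_name>; return the commands used."""
    tag = '{}/{}'.format(dockerhub_user, image_name)
    commands = [
        ['docker', 'build', '-t', tag, context],
        ['docker', 'push', tag],
    ]
    if not dry_run:
        for cmd in commands:
            # check=True stops at the first failing step, e.g. a build error.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == '__main__':
    for cmd in build_and_push('your_dockerhub_user'):
        print(' '.join(cmd))
```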

With the image pushed to Docker Hub, we can now run it on Knative.

Deploying the Python Flask app on Knative

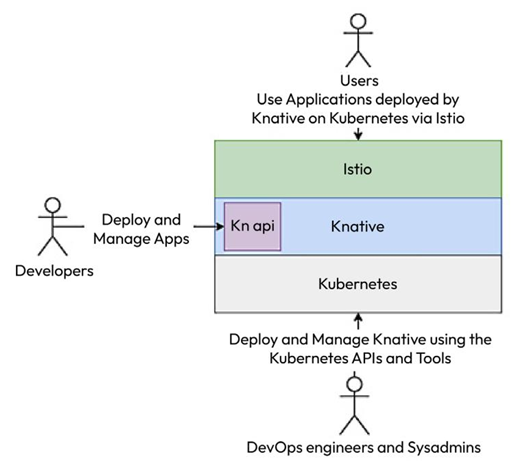

We can deploy the app either with the kn command line or with a manifest file. Let’s start with kn and use the following command to deploy the application:

$ kn service create py-time --image <your_dockerhub_user>/py-time

Creating service 'py-time' in namespace 'default':

  9.412s Configuration "py-time" is waiting for a Revision to become ready.
  9.652s Ingress has not yet been reconciled.
  9.847s Ready to serve.

Service 'py-time' created to latest revision 'py-time-00001' is available at URL:
http://py-time.default.35.226.198.46.sslip.io

As we can see, Knative has deployed the app and provided a custom endpoint. Let’s curl the endpoint to see what we get:

$ curl http://py-time.default.35.226.198.46.sslip.io
The current time is : 2023-07-03 13:30:20.804790!
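To script this check instead of eyeballing the curl output, the sketch below fetches a URL with `urllib` and verifies that the body has the shape produced by `current_time()`: the prefix followed by `str(datetime.datetime.now())`. Because `str()` on a datetime is `isoformat(' ')`, `datetime.fromisoformat()` can parse the timestamp back, with or without microseconds. The function names are hypothetical, and the endpoint URL will differ on your cluster.

```python
import datetime
import urllib.request

PREFIX = 'The current time is : '
SUFFIX = '!\n'


def parse_time_response(body):
    """Return the datetime embedded in a py-time response, or raise ValueError."""
    if not (body.startswith(PREFIX) and body.endswith(SUFFIX)):
        raise ValueError('unexpected response: {!r}'.format(body))
    stamp = body[len(PREFIX):-len(SUFFIX)]
    return datetime.datetime.fromisoformat(stamp)


def fetch(url, timeout=10):
    """GET the URL and return the decoded body, like `curl <url>`."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode('utf-8')


if __name__ == '__main__':
    # Parse the sample body shown above; against a live service you would call
    # parse_time_response(fetch('http://py-time.default.35.226.198.46.sslip.io')).
    print(parse_time_response('The current time is : 2023-07-03 13:30:20.804790!\n'))
```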

We get the current time in the response. Knative scales a revision down to zero pods when it stops receiving traffic, so let’s watch the pods for a while and see what happens:

$ kubectl get pod -w
NAME                             READY   STATUS        RESTARTS   AGE
py-time-00001-deployment-jqrbk   2/2     Running       0          5s
py-time-00001-deployment-jqrbk   2/2     Terminating   0          64s

As we can see, after roughly a minute without traffic, Knative starts terminating the pod. This is what we mean by scaling to zero; when a new request arrives, Knative scales the revision back up from zero.
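The decision behind this behavior can be sketched in a few lines. This is a deliberately simplified model, not Knative’s autoscaler code: it assumes the default 60-second `stable-window` and the `enable-scale-to-zero` switch from Knative’s `config-autoscaler` ConfigMap, and it ignores details such as the activator handoff and `scale-to-zero-grace-period`. The function name is hypothetical.

```python
from datetime import datetime, timedelta

# Knative's default stable-window (config-autoscaler); clusters can change it.
STABLE_WINDOW = timedelta(seconds=60)


def should_scale_to_zero(last_request_at, now, stable_window=STABLE_WINDOW,
                         scale_to_zero_enabled=True):
    """Simplified rule: scale to zero once no request has arrived for a full window."""
    if not scale_to_zero_enabled:
        return False
    return now - last_request_at >= stable_window


if __name__ == '__main__':
    last = datetime(2023, 7, 3, 13, 30, 20)
    print(should_scale_to_zero(last, last + timedelta(seconds=30)))
    print(should_scale_to_zero(last, last + timedelta(seconds=64)))
```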

To delete the service permanently, we can use the following command:

$ kn service delete py-time

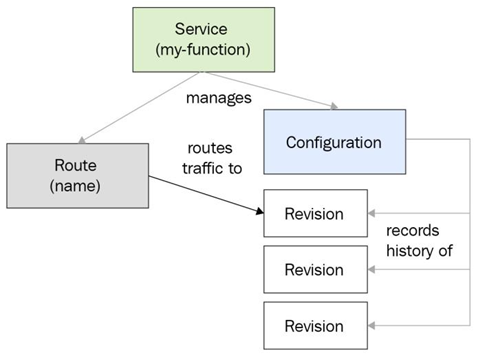

We’ve just looked at the imperative way of deploying and managing the application. But what if we want to declare the configuration, as we did previously? We can write a manifest for the Service custom resource that Knative provides under the serving.knative.dev/v1 API version.

We will create the following manifest file, called py-time-deploy.yaml, for this:

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: py-time
spec:
  template:
    spec:
      containers:
        - image: <your_dockerhub_user>/py-time

Now that we have created this file, we can apply it with the kubectl CLI, which keeps the deployment workflow consistent with the rest of Kubernetes.

Note

Although its kind is Service, don’t confuse this resource with the core Kubernetes Service resource, which uses apiVersion v1. It is a custom resource provided by Knative under serving.knative.dev/v1, so the apiVersion field is what tells Kubernetes which Service you mean.
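To make this concrete: Kubernetes identifies a resource type by its API group, version, and kind together, so `kind: Service` alone is ambiguous. The sketch below uses a hypothetical `group_version_kind` helper that splits `apiVersion` the way Kubernetes does (core resources such as the built-in Service use plain `v1`, meaning an empty group) and shows that the two Service manifests differ only in their group. The manifests are Python dicts mirroring py-time-deploy.yaml; the core Service is trimmed to the fields needed here.

```python
def group_version_kind(manifest):
    """Split apiVersion into (group, version) and pair it with kind."""
    group, _, version = manifest['apiVersion'].rpartition('/')  # 'v1' -> ('', '', 'v1')
    return group, version, manifest['kind']


knative_service = {
    'apiVersion': 'serving.knative.dev/v1',
    'kind': 'Service',
    'metadata': {'name': 'py-time'},
    'spec': {'template': {'spec': {'containers': [
        {'image': '<your_dockerhub_user>/py-time'},
    ]}}},
}

# Trimmed core Service: only the fields the helper reads, plus a name.
core_service = {'apiVersion': 'v1', 'kind': 'Service', 'metadata': {'name': 'py-time'}}

if __name__ == '__main__':
    print(group_version_kind(knative_service))
    print(group_version_kind(core_service))
```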

Let’s go ahead and apply the manifest with the following command:

$ kubectl apply -f py-time-deploy.yaml

service.serving.knative.dev/py-time created

With that, the service has been created. To get the service’s endpoint, we query the ksvc (Knative Service) resource using kubectl. Run the following command to do so:

$ kubectl get ksvc py-time
NAME      URL
py-time   http://py-time.default.35.226.198.46.sslip.io
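The same URL is stored in the Knative Service’s `status.url` field, so scripts can read it from `kubectl get ksvc py-time -o json` (or `-o jsonpath='{.status.url}'`) instead of scraping the table. Its hostname follows Knative’s default domain template, `{{.Name}}.{{.Namespace}}.{{.Domain}}`, where the domain here is an sslip.io wildcard DNS name that embeds the ingress IP. The sketch below assumes a trimmed JSON document containing only the field it reads; the helper names and `SAMPLE` are hypothetical.

```python
import json
from urllib.parse import urlsplit


def service_url(ksvc_json_text):
    """Return status.url from `kubectl get ksvc <name> -o json` output."""
    return json.loads(ksvc_json_text)['status']['url']


def split_knative_host(url):
    """Split a default-template host into (name, namespace, domain).

    Service and namespace names are DNS labels without dots, so the first
    two dot-separated parts are the name and namespace; the rest is the domain.
    """
    host = urlsplit(url).hostname
    name, namespace, domain = host.split('.', 2)
    return name, namespace, domain


# Trimmed stand-in for real kubectl output; only the field read above is kept.
SAMPLE = '{"status": {"url": "http://py-time.default.35.226.198.46.sslip.io"}}'

if __name__ == '__main__':
    url = service_url(SAMPLE)
    print(url)
    print(split_knative_host(url))
```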

The URL is the custom endpoint we have to target. Let’s curl the custom endpoint using the following command:

$ curl http://py-time.default.35.226.198.46.sslip.io
The current time is : 2023-07-03 13:30:23.345223!

We get the same response this time as well! So, if you want to keep using kubectl for managing Knative, you can easily do so.

Knative also scales applications horizontally and automatically, based on the load they receive. Let’s run a load test on our application to see that in action.
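Before running the load test, it helps to know roughly how many pods to expect. The sketch below is a simplified version of the Knative Pod Autoscaler’s concurrency-based calculation, not its actual code: desired pods are the observed concurrent requests divided by the effective per-pod target, rounded up. It assumes Knative’s documented defaults of a concurrency target of 100 and a target utilization of 70%, and clamps the result to optional minimum and maximum scale bounds; the function and parameter names are hypothetical.

```python
import math


def desired_pods(observed_concurrency, target=100, utilization_percent=70,
                 min_scale=0, max_scale=None):
    """Simplified KPA-style estimate of how many pods a revision needs."""
    effective_target = target * utilization_percent / 100  # 70.0 with the defaults
    pods = math.ceil(observed_concurrency / effective_target)
    pods = max(pods, min_scale)
    if max_scale is not None:
        pods = min(pods, max_scale)
    return pods


if __name__ == '__main__':
    for concurrency in (0, 50, 70, 71, 300):
        print(concurrency, desired_pods(concurrency))
```

With these defaults, zero concurrent requests maps to zero pods (the scale-to-zero case we saw earlier), and each additional 70 concurrent requests adds roughly one pod.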