The AWS CLI is available as a deb package within the public apt repositories. To install it, run the following commands:

$ sudo apt update && sudo apt install awscli -y

$ aws --version

aws-cli/1.22.34 Python/3.10.6 Linux/5.19.0-1028-aws botocore/1.23.34

Installing the ECS CLI in the Linux ecosystem is simple. We need to download the binary to a directory on the system path and make it executable using the following commands:

$ sudo curl -Lo /usr/local/bin/ecs-cli \
  https://amazon-ecs-cli.s3.amazonaws.com/ecs-cli-linux-amd64-latest

$ sudo chmod +x /usr/local/bin/ecs-cli

Run the following command to check whether ecs-cli has been installed correctly:

$ ecs-cli --version

ecs-cli version 1.21.0 (bb0b8f0)

As we can see, ecs-cli has been successfully installed on our system.

The next step is to allow ecs-cli to connect to the AWS API. To do so, export your AWS credentials and default region as environment variables by running the following commands:

$ export AWS_SECRET_ACCESS_KEY=…

$ export AWS_ACCESS_KEY_ID=…

$ export AWS_DEFAULT_REGION=…

Once we’ve set the environment variables, ecs-cli will use them to authenticate with the AWS API.
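Before moving on, it can help to confirm that all three variables are actually set in the current shell. The following is a minimal sketch: check_aws_env is a hypothetical helper name, not part of the AWS CLI or the ECS CLI, and it only checks that the variables are non-empty, not that the credentials are valid.

```shell
# check_aws_env is a hypothetical helper (not part of any AWS tool).
# It reports which of the three variables exported above are missing
# and never prints their values.
check_aws_env() {
  missing=0
  for name in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY AWS_DEFAULT_REGION; do
    # Look up the value of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage:
#   check_aws_env && echo "AWS environment looks complete"
```

The function returns a non-zero status if any variable is missing, so it can guard later commands in a script.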

In the next section, we’ll spin up an ECS cluster using the ECS CLI.

Spinning up an ECS cluster

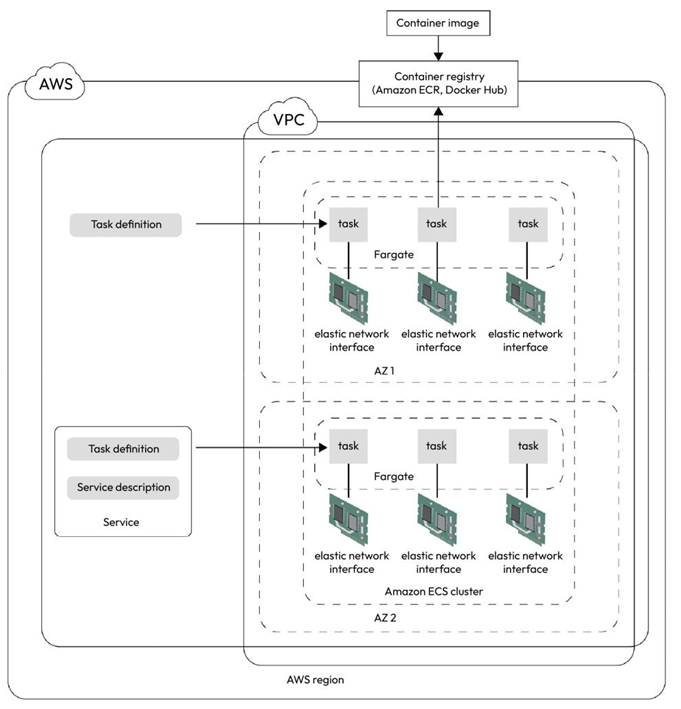

We can use ECS CLI commands to spin up an ECS cluster. You can run your containers on both EC2 and Fargate, so first, we will create a cluster that runs EC2 instances. Then, we will add Fargate tasks within the cluster.

To connect with your EC2 instances, you need to generate a key pair within AWS. To do so, run the following command:

$ aws ec2 create-key-pair --key-name ecs-keypair

This command prints the key pair as JSON. Extract the value of the KeyMaterial field from the JSON output and save it in a separate file called ecs-keypair.pem. Remember to replace the \n escape sequences with actual newlines when you save the file.
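As an alternative to copying the key material by hand, the AWS CLI can extract the field itself through its global --query and --output options. The following is a sketch under a few assumptions: save_key_pair is a hypothetical helper name, the key pair does not already exist in your account (create-key-pair fails if the name is taken), and you want the file in the current directory.

```shell
# save_key_pair is a hypothetical helper; it wraps the real
# `aws ec2 create-key-pair` command and writes <key name>.pem.
save_key_pair() {
  key_name="$1"
  # --query selects only the KeyMaterial field; --output text prints it
  # as plain text, so the newlines arrive unescaped.
  aws ec2 create-key-pair --key-name "$key_name" \
    --query 'KeyMaterial' --output text > "${key_name}.pem" || return 1
  # Restrict the private key to read-only access for the owner.
  chmod 400 "${key_name}.pem"
}

# Example usage:
#   save_key_pair ecs-keypair
```

Use this instead of the manual approach, not in addition to it, because a key pair name can only be created once.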

Once we’ve generated the key pair, we can use the following command to create an ECS cluster using the ECS CLI:

$ ecs-cli up --keypair ecs-keypair --instance-type t2.micro \
  --size 2 --cluster cluster-1 --capability-iam

INFO[0002] Using recommended Amazon Linux 2 AMI with ECS Agent 1.72.0 and Docker version 20.10.23

INFO[0003] Created cluster cluster=cluster-1 region=us-east-1

INFO[0004] Waiting for your cluster resources to be created…

INFO[0130] Cloudformation stack status stackStatus=CREATE_IN_PROGRESS

VPC created: vpc-0448321d209bf75e2

Security Group created: sg-0e30839477f1c9881

Subnet created: subnet-02200afa6716866fa

Subnet created: subnet-099582f6b0d04e419

Cluster creation succeeded.

When we issue this command, the ECS CLI spins up a stack of resources in the background using CloudFormation. CloudFormation is AWS’s Infrastructure-as-Code (IaC) solution that helps you deploy infrastructure on AWS through reusable templates. The CloudFormation template consists of several resources, such as a VPC, a security group, two subnets within the VPC, a route table, a route, subnet route table associations, an internet gateway, an IAM role, an instance profile, a launch configuration, an Auto Scaling group (ASG), a VPC gateway attachment, and the cluster itself. The ASG contains two EC2 instances that run and serve the cluster. Keep a copy of the output; we will need the details later during the exercises.
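To see these resources for yourself, you can ask CloudFormation what the stack contains. The following sketch rests on one assumption: that the ECS CLI names its stack amazon-ecs-cli-setup-<cluster name>. If your stack has a different name, adjust the stack_name variable. list_cluster_stack_resources is a hypothetical helper name.

```shell
# list_cluster_stack_resources is a hypothetical helper that wraps the
# real `aws cloudformation describe-stack-resources` command.
list_cluster_stack_resources() {
  cluster_name="$1"
  # Assumption: the ECS CLI names its stack amazon-ecs-cli-setup-<cluster name>.
  stack_name="amazon-ecs-cli-setup-${cluster_name}"
  # Print one row per resource: its type and its physical ID.
  aws cloudformation describe-stack-resources \
    --stack-name "$stack_name" \
    --query 'StackResources[].[ResourceType,PhysicalResourceId]' \
    --output table
}

# Example usage:
#   list_cluster_stack_resources cluster-1
```

The physical IDs in the table should match the VPC, security group, and subnet IDs printed by ecs-cli up.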

Now that our cluster is up, we will spin up our first task.